wespiva - Web Spider Validator

Web Spider Validator, wespiva for short, is a combination of

- a web spider (robot, crawler), which traverses web pages that are linked together,

- and an XHTML validator, which checks whether a page contains only valid tags, attributes and allowed attribute values.

Description

The purpose of this tool is to ensure high-quality, standards-compliant websites.

Xenu's Link Sleuth is a great tool for spidering and finding dead links, but it does not validate a page.

The w3.org validator is a great validation tool, but it only checks a single page, and it is often overloaded and slow.

wespiva overcomes these restrictions by spidering and validating in one pass.
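
The idea of spidering and validating in one pass can be sketched roughly as follows. This is a minimal illustration in Python, not wespiva's actual (.NET) implementation. The names `crawl_and_check`, `is_well_formed` and `LinkCollector`, the injected `fetch` function and the `example.test` pages are all assumptions made for the example, and the check only tests XML well-formedness rather than the full tag and attribute validation described above.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin
import xml.etree.ElementTree as ET


class LinkCollector(HTMLParser):
    """Collects the href values of all <a> tags (hypothetical helper)."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def is_well_formed(markup):
    """Return (ok, message). Checks XML well-formedness only."""
    try:
        ET.fromstring(markup)
        return True, ""
    except ET.ParseError as err:
        return False, str(err)


def crawl_and_check(start_url, fetch):
    """Breadth-first crawl. `fetch(url)` returns markup, or None for a dead link."""
    seen = {start_url}
    queue = deque([start_url])
    report = {}
    while queue:
        url = queue.popleft()
        markup = fetch(url)
        if markup is None:
            report[url] = "dead link"
            continue
        ok, message = is_well_formed(markup)
        report[url] = "ok" if ok else "invalid: " + message
        collector = LinkCollector()
        collector.feed(markup)
        for href in collector.links:
            # Resolve relative links and drop fragments such as #top.
            target = urldefrag(urljoin(url, href)).url
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return report


# Hypothetical in-memory site, so the sketch runs without network access.
SITE = {
    "http://example.test/": '<html><body><a href="a.html">A</a>'
                            '<a href="gone.html">X</a></body></html>',
    "http://example.test/a.html": "<html><body><p>unclosed<br></body></html>",
}
report = crawl_and_check("http://example.test/", SITE.get)
for url, status in sorted(report.items()):
    print(url, "->", status)
```

Passing `fetch` in as a parameter keeps the crawl loop independent of the network, so each page is requested once and checked in the same pass. A real spider would also restrict itself to the site being checked.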

This tool assists in the transition of bigger sites to XHTML.

Download

Download wespiva, version 4.2024-04-05 (746 KB 7z file, 2024-04-05)

Although the program is written not to harm any computer, a crash may still occur by accident or through a programming error in the application or in one of the .NET functions it uses. So that I cannot be held liable for any negative consequences resulting from the use of this program (such as lost time, data loss or wrong reports), you may only use the program if you accept the following rules:

- You back up your system regularly.

- You will not hold me responsible for damages (lost time, crashed computer, etc.), unless the damage was caused intentionally.

Installation

Prerequisites

wespiva runs on Windows with .NET Framework 4.0 installed.

How to run

Just extract the single file from the downloaded archive and start using it.

Frequently asked questions for wespiva

- Does it run on Mono for Windows?

- A special version runs on Mono 2.2, but it hangs when the form is resized while wespiva is spidering. The reason is unknown; possibly Mono has some bugs with Windows.Forms and multithreading. If you don't touch the application until the scan is over, all is well.

- Will there be a Mono version for Linux/OS X?

- Probably yes, if someone pays for it. If no one is willing to pay for it, there is no big demand for it.

- How many pages can be checked in one run?

- I've used it to check sites with more than 50,000 elements in less than 15 minutes. The duration depends mainly on the line speed and the responsiveness of the web server delivering the pages.

- Why Validation?

- I'll let others speak here:

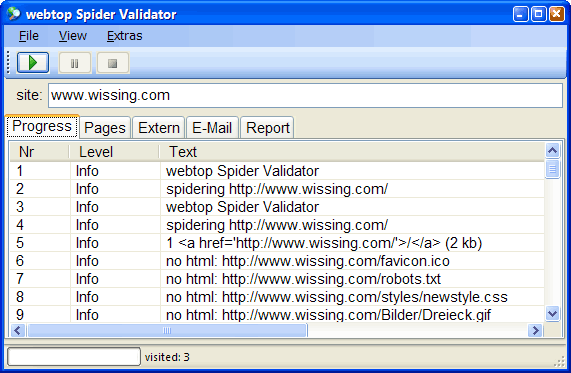

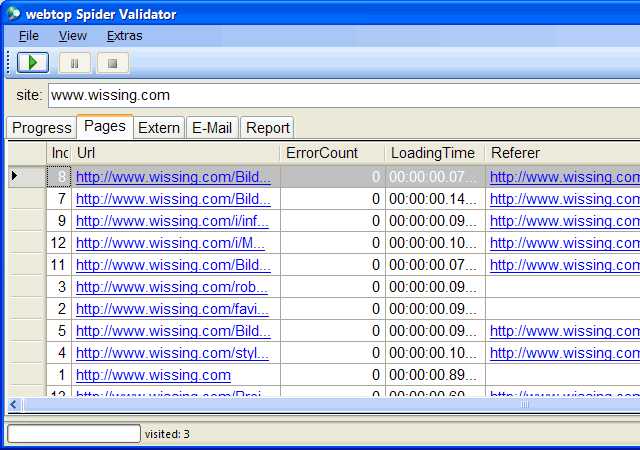

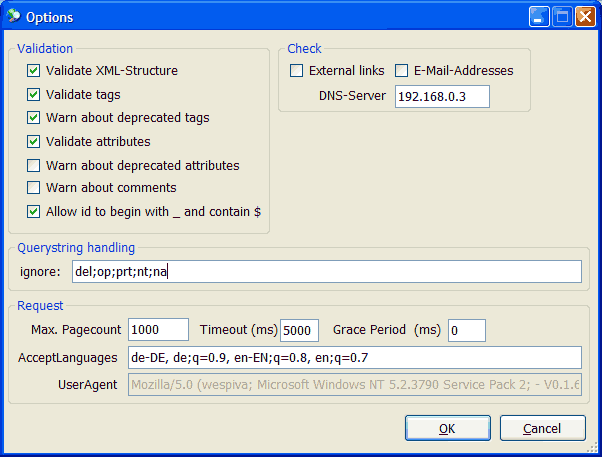

Samples

Features

- easy to use

- easy to install (just a single exe file)

- wespiva can be used in an intranet; no internet access is needed for validation

- fast (can check over 50,000 elements in less than 15 minutes)

- detects dead links

- finds validation issues

- generates easy to understand reports

- generates a sitemap in the standard-sitemap-format

- can be called from the command line for automated periodic checks of a site

- spidering and validation are done in a background thread, so the GUI stays responsive

- convenient configuration; for example, a grace period can be set

- can check all pictures found:

  - Is the picture compression optimal? You can set the desired quality level and the minimum savings required for a picture to be reported.

  - Is the picture correct, i.e. can it be loaded without any format problems?

  - Does the picture contain a lot of metadata, which is possibly an undesired information leak?
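
The sitemap feature in the list above presumably refers to the sitemaps.org XML format. Assuming that, a minimal sketch of writing such a file from a list of spidered URLs could look like this. The function name `build_sitemap` and the example URLs are hypothetical, and wespiva's own output may contain additional elements.

```python
import xml.etree.ElementTree as ET

# Namespace of the sitemaps.org protocol, version 0.9.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a sitemap document as a string, one <url><loc> entry per unique URL."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in sorted(set(urls)):
        entry = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as & when serializing.
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


sitemap_xml = build_sitemap([
    "http://example.test/b?x=1&y=2",
    "http://example.test/a",
    "http://example.test/a",  # duplicates are written only once
])
print(sitemap_xml)
```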

runnable from command-line

c:\wespiva.exe "www.wissing.com" "example@example.not"

Known Bugs

- Not all HTML5 tags and attributes are checked. Like HTML5 itself, this is a permanent work in progress.

- Sites using the base tag are not supported.
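
To illustrate why the base tag matters for a spider: it changes the URL against which relative links are resolved, so a crawler that ignores it follows different addresses than a browser would. The short Python sketch below shows the difference. The URLs are made up, and this is only an illustration of the general mechanism, not of wespiva's code.

```python
from urllib.parse import urljoin

page_url = "http://example.test/docs/index.html"
base_href = "http://example.test/static/"  # value of a hypothetical <base href="...">
href = "img/logo.png"

# Ignoring <base>: the link is resolved against the page's own URL.
without_base = urljoin(page_url, href)

# Honouring <base>: the base is resolved first, then the link against it.
with_base = urljoin(urljoin(page_url, base_href), href)

print(without_base)  # http://example.test/docs/img/logo.png
print(with_base)     # http://example.test/static/img/logo.png
```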

Future Features

- https-Support

- Online-Version

- Thumbnail of every web-page and graphic resources

- checking of inline-Anchor-Hrefs (like #top)

Already done:

- Multi-Threading (for other than the GUI)

- JavaScript-Extraction

- robots.txt conformance

- Basic Authentication

- X.509 Certificates

- Proxy-Support

- Integration into our CMS

- Text-Extraction

- Style/CSS-Extraction
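
As an illustration of what robots.txt conformance means for a spider, here is a small Python sketch using the standard library's `urllib.robotparser`. The robots.txt content, the user agent name `example-spider` and the helper `make_checker` are assumptions for the example. How wespiva implements this check is not described here.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, as a site might serve it.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def make_checker(robots_txt, user_agent):
    """Return a function that tells whether `user_agent` may fetch a URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return lambda url: parser.can_fetch(user_agent, url)


allowed = make_checker(ROBOTS_TXT, "example-spider")
print(allowed("http://example.test/index.html"))      # True
print(allowed("http://example.test/private/a.html"))  # False
```

A conforming spider would call such a check before requesting each URL and skip the disallowed ones.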

History / Changes

- 2012-06-29, The cache settings of pictures are checked: the response header should contain max-age or Expires allowing at least 48 hours of caching

- 2012-06-28, New:

  - HTML5: More HTML5 tags and attributes are checked, some existing checks were refined and extended.

  - Agile HTML parser as fallback: The parser falls back from XML parsing to an "agile" HTML mode, in order to check even HTML that is not XML-conformant (based on HTML Agility Pack).

  - Picture check: After a validation run you can call the new "check images" function. It checks whether all pictures found are valid and whether they can be compressed further; you can set the quality level and the minimum savings. If a picture contains "many" metadata items, this is reported.

  - The flickering when adding new lines to the result table is gone.

  - wespiva is now compiled for .NET 4.0 instead of 3.5

- 2011-06-08, Redirecting corrected, provisional HTML5 support, save/load website url into/from project file

- 2010-01-27, some minor errors eliminated, extracting JavaScript, Multithreaded validation

- 2008-12-19, version 0.1.9: robots.txt, parsing error eliminated
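
The picture cache check from the 2012-06-29 entry (max-age or Expires, at least 48 hours) could be sketched as follows. This is an assumption-laden illustration in Python, not wespiva's code. The function names, the plain `dict` of headers with exact-case keys, and the choice to let max-age take precedence over Expires (as HTTP caching rules do) are all choices made for the example.

```python
import re
from datetime import datetime, timedelta, timezone
from email.utils import parsedate_to_datetime

MIN_CACHE = timedelta(hours=48)  # threshold taken from the change log entry


def cache_lifetime(headers, now=None):
    """Return the cache lifetime as a timedelta, or None if none is given."""
    now = now or datetime.now(timezone.utc)
    cache_control = headers.get("Cache-Control", "")
    match = re.search(r"max-age\s*=\s*(\d+)", cache_control, re.IGNORECASE)
    if match:
        return timedelta(seconds=int(match.group(1)))
    expires = headers.get("Expires")
    if expires:
        try:
            when = parsedate_to_datetime(expires)
        except (TypeError, ValueError):
            return None  # unparseable date: treat as no cache information
        if when.tzinfo is None:
            when = when.replace(tzinfo=timezone.utc)
        return when - now
    return None


def is_cached_long_enough(headers, now=None):
    """True if the headers allow at least MIN_CACHE of caching."""
    lifetime = cache_lifetime(headers, now)
    return lifetime is not None and lifetime >= MIN_CACHE


# Fixed reference time so the example is reproducible.
now = datetime(2024, 1, 1, tzinfo=timezone.utc)
print(is_cached_long_enough({"Cache-Control": "public, max-age=172800"}, now))
print(is_cached_long_enough({"Cache-Control": "max-age=3600"}, now))
```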

Other nice Validators

They are really good, but don't let you check whole sites: